publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2025

- TPAMI

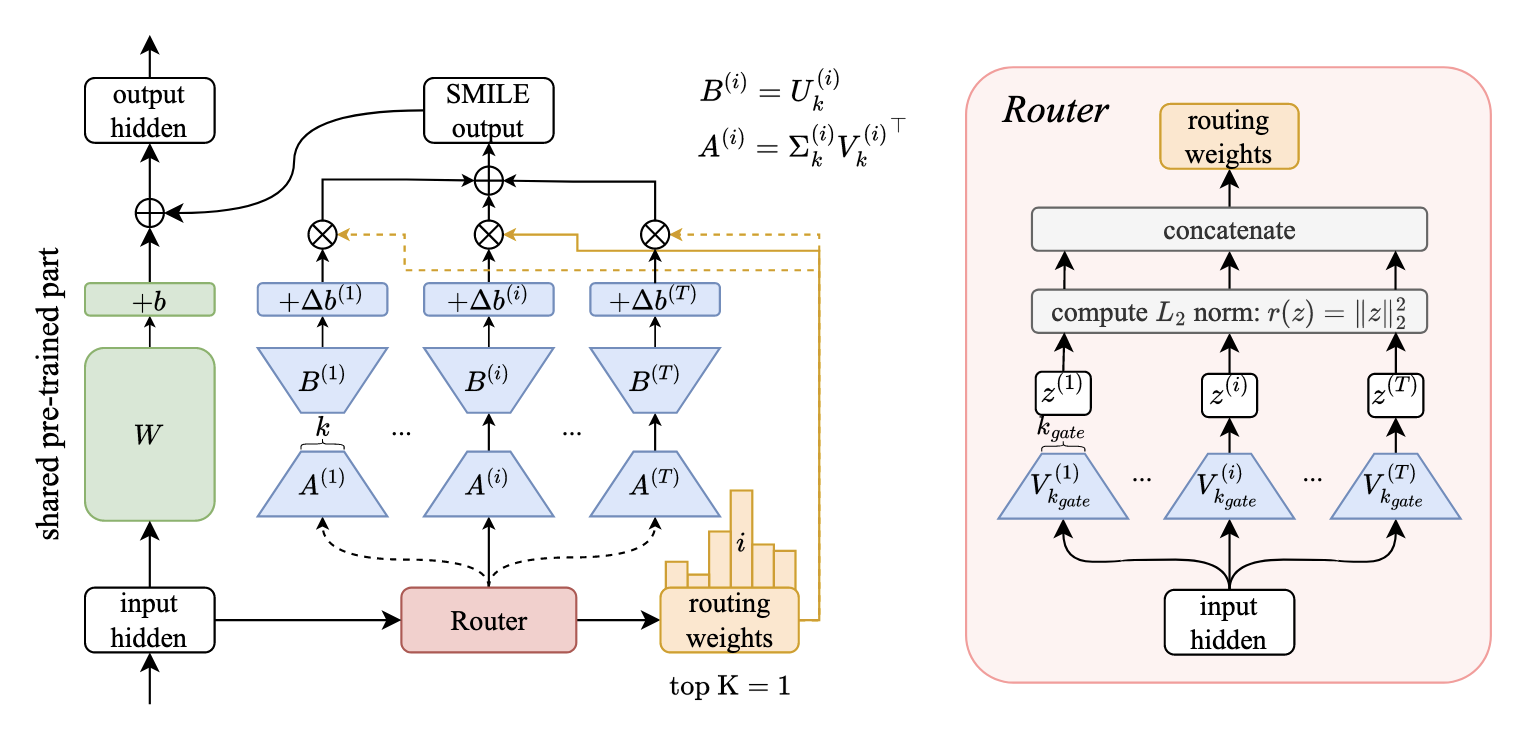

Zero-Shot Sparse Mixture of Low-Rank Experts Construction From Pre-Trained Foundation ModelsIEEE Transactions on Pattern Analysis and Machine Intelligence, 2025

Zero-Shot Sparse Mixture of Low-Rank Experts Construction From Pre-Trained Foundation ModelsIEEE Transactions on Pattern Analysis and Machine Intelligence, 2025 - IJCVData-adaptive weight-ensembling for multi-task model fusionInternational Journal of Computer Vision, 2025

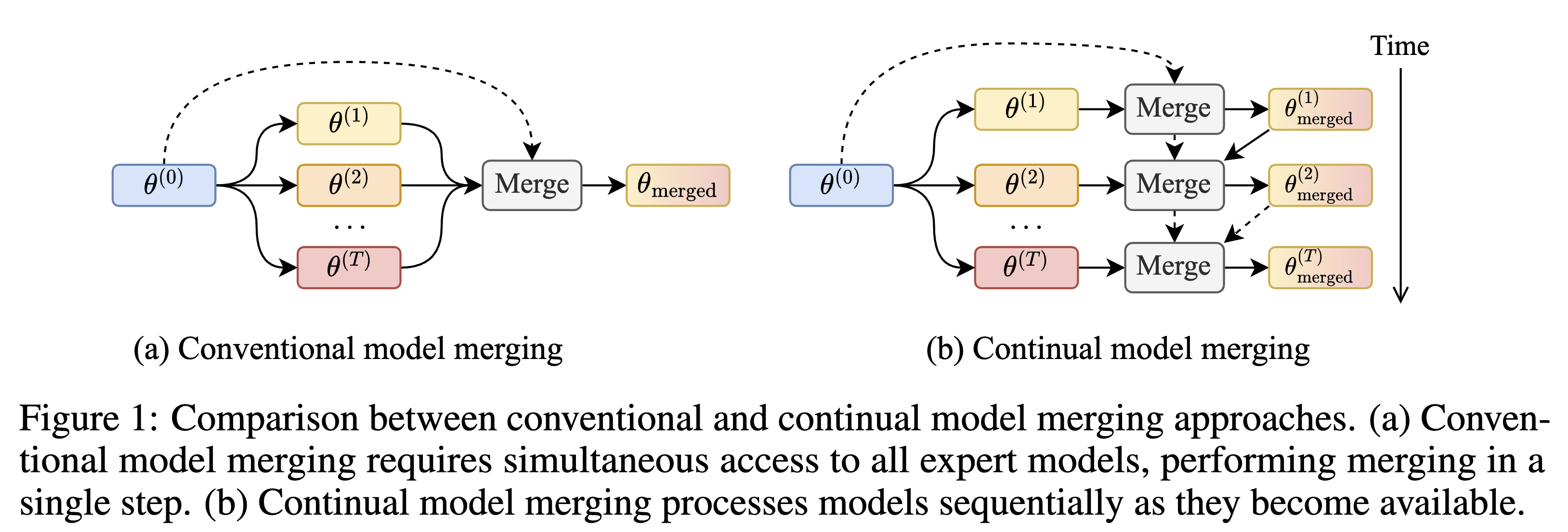

- NeurIPS: Continual Model Merging without Data: Dual Projections for Balancing Stability and Plasticity. The Thirty-Ninth Annual Conference on Neural Information Processing Systems, 2025

- NeurIPS: Mix Data or Merge Models? Balancing the Helpfulness, Honesty, and Harmlessness of Large Language Model via Model Merging. The Thirty-Ninth Annual Conference on Neural Information Processing Systems, 2025

- ICML: Targeted Low-rank Refinement: Enhancing Sparse Language Models with Precision. In Forty-second International Conference on Machine Learning, 2025

- ICML: Modeling Multi-Task Model Merging as Adaptive Projective Gradient Descent. In Forty-second International Conference on Machine Learning, 2025

- ICLR: Mitigating the Backdoor Effect for Multi-Task Model Merging via Safety-Aware Subspace. In the 13th International Conference on Learning Representations (ICLR), 2025

- NMI

2024

- Fusionbench: A comprehensive benchmark of deep model fusion. arXiv preprint arXiv:2406.03280, 2024

- Towards efficient pareto set approximation via mixture of experts based model fusion. arXiv preprint arXiv:2406.09770, 2024

- Efficient and effective weight-ensembling mixture of experts for multi-task model merging. arXiv preprint arXiv:2410.21804, 2024

- ICML

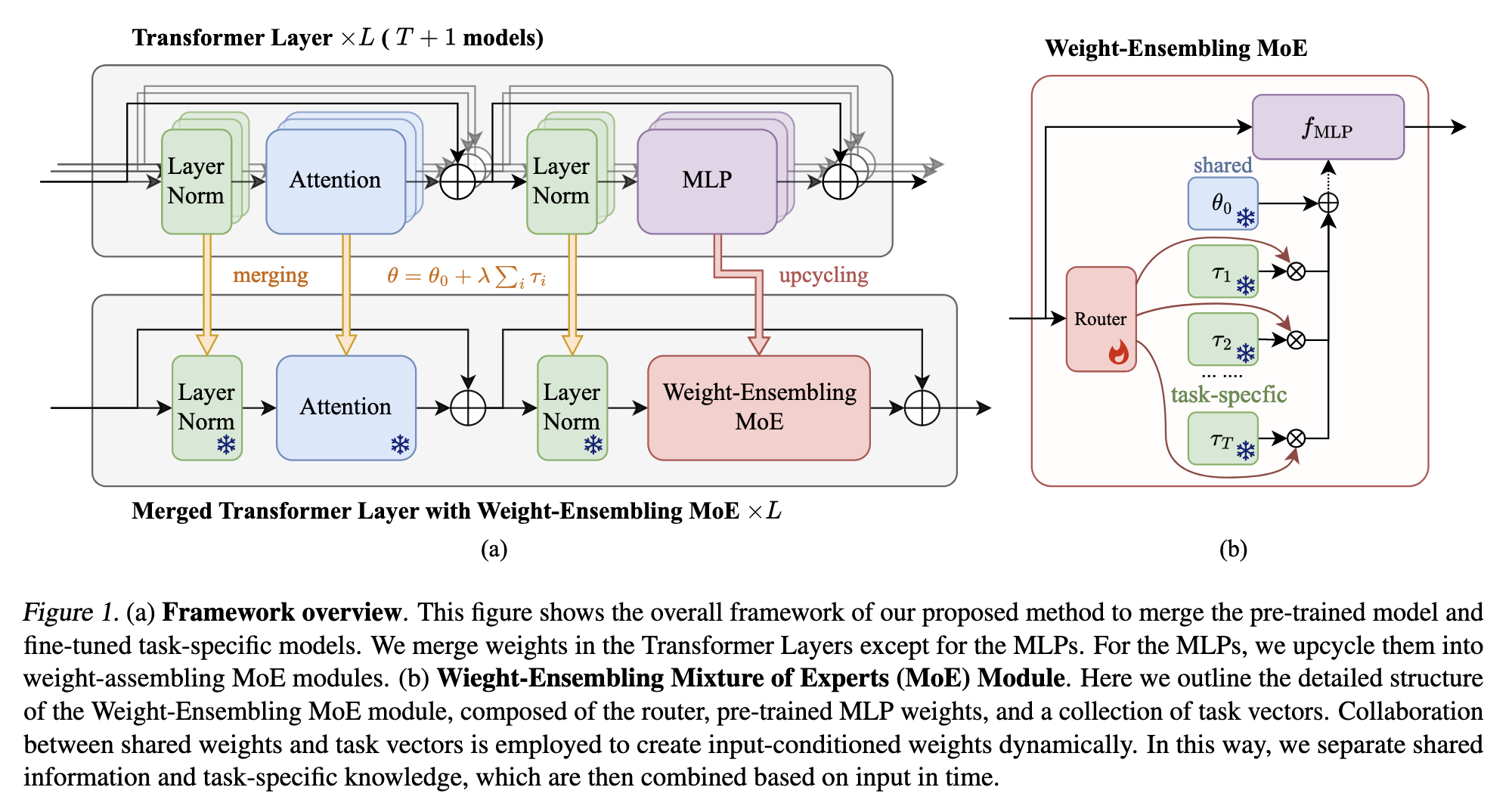

Merging Multi-Task Models via Weight-Ensembling Mixture of ExpertsIn The 41th International Conference on Machine Learning (ICML), 2024

Merging Multi-Task Models via Weight-Ensembling Mixture of ExpertsIn The 41th International Conference on Machine Learning (ICML), 2024 - ICLRParameter efficient multi-task model fusion with partial linearizationIn the 12th International Conference on Learning Representations, 2024

2023

- Concrete subspace learning based interference elimination for multi-task model fusion. arXiv preprint arXiv:2312.06173, 2023

- IJCAI: Improving Heterogeneous Model Reuse by Density Estimation. In Thirty-Second International Joint Conference on Artificial Intelligence, 2023