Anke Tang

Ph.D. Student. Machine Learning. AI Research.

School of Computer Science

Wuhan University, China

I am currently pursuing my Ph.D. degree at the School of Computer Science, Wuhan University, under the supervision of Prof. Yong Luo and Assoc. Prof. Shen Li. I received my Bachelor's degree from the School of Physics and Technology, Wuhan University, in 2020. My research interests include machine learning, transfer learning, and multi-task learning.

news

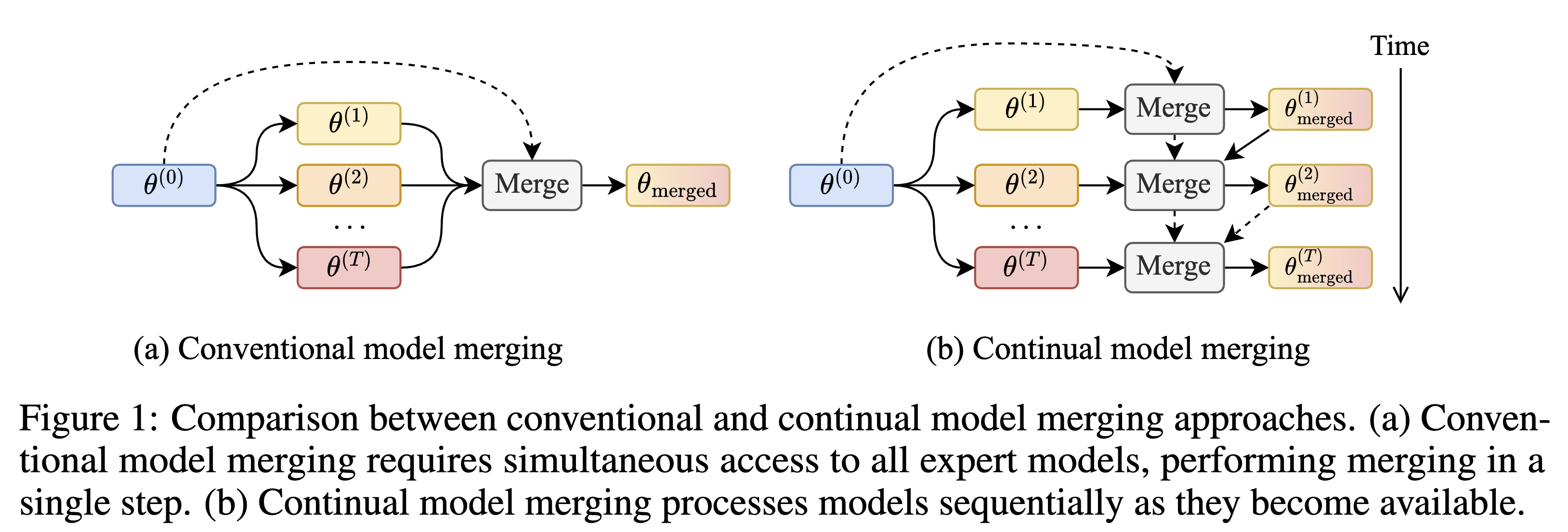

| Date | News |
|---|---|
| Sep 20, 2025 | Three of our papers have been accepted to NeurIPS 2025, two of which are on continual model merging. |

selected publications

- TPAMI

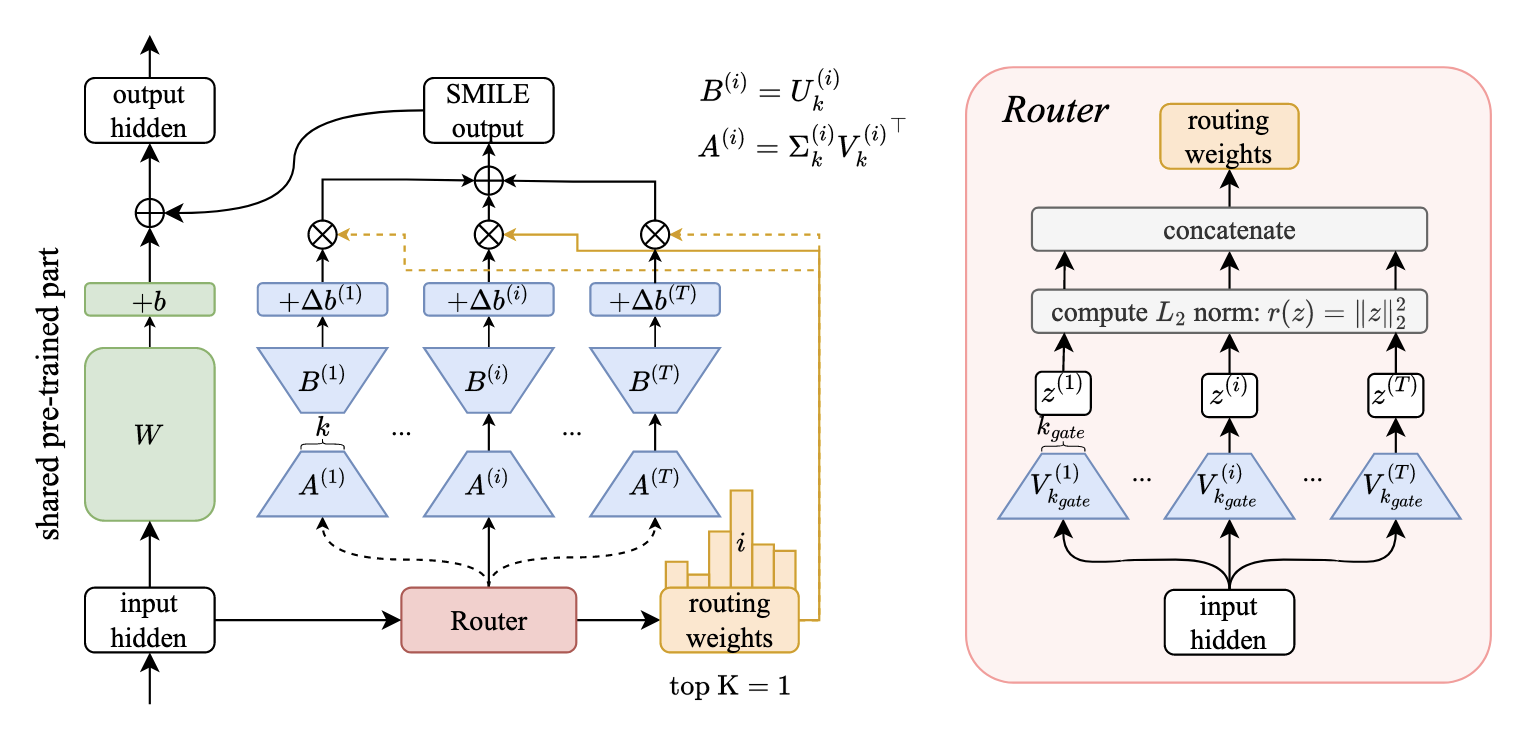

Zero-Shot Sparse Mixture of Low-Rank Experts Construction From Pre-Trained Foundation ModelsIEEE Transactions on Pattern Analysis and Machine Intelligence, 2025

Zero-Shot Sparse Mixture of Low-Rank Experts Construction From Pre-Trained Foundation ModelsIEEE Transactions on Pattern Analysis and Machine Intelligence, 2025 - IJCVData-adaptive weight-ensembling for multi-task model fusionInternational Journal of Computer Vision, 2025

- NeurIPS: Continual Model Merging without Data: Dual Projections for Balancing Stability and Plasticity. In the Thirty-Ninth Annual Conference on Neural Information Processing Systems, 2025

- ICML: Targeted Low-rank Refinement: Enhancing Sparse Language Models with Precision. In the Forty-second International Conference on Machine Learning, 2025

- ICML: Modeling Multi-Task Model Merging as Adaptive Projective Gradient Descent. In the Forty-second International Conference on Machine Learning, 2025

- ICLR: Mitigating the Backdoor Effect for Multi-Task Model Merging via Safety-Aware Subspace. In the 13th International Conference on Learning Representations (ICLR), 2025

- NMI

- ICML

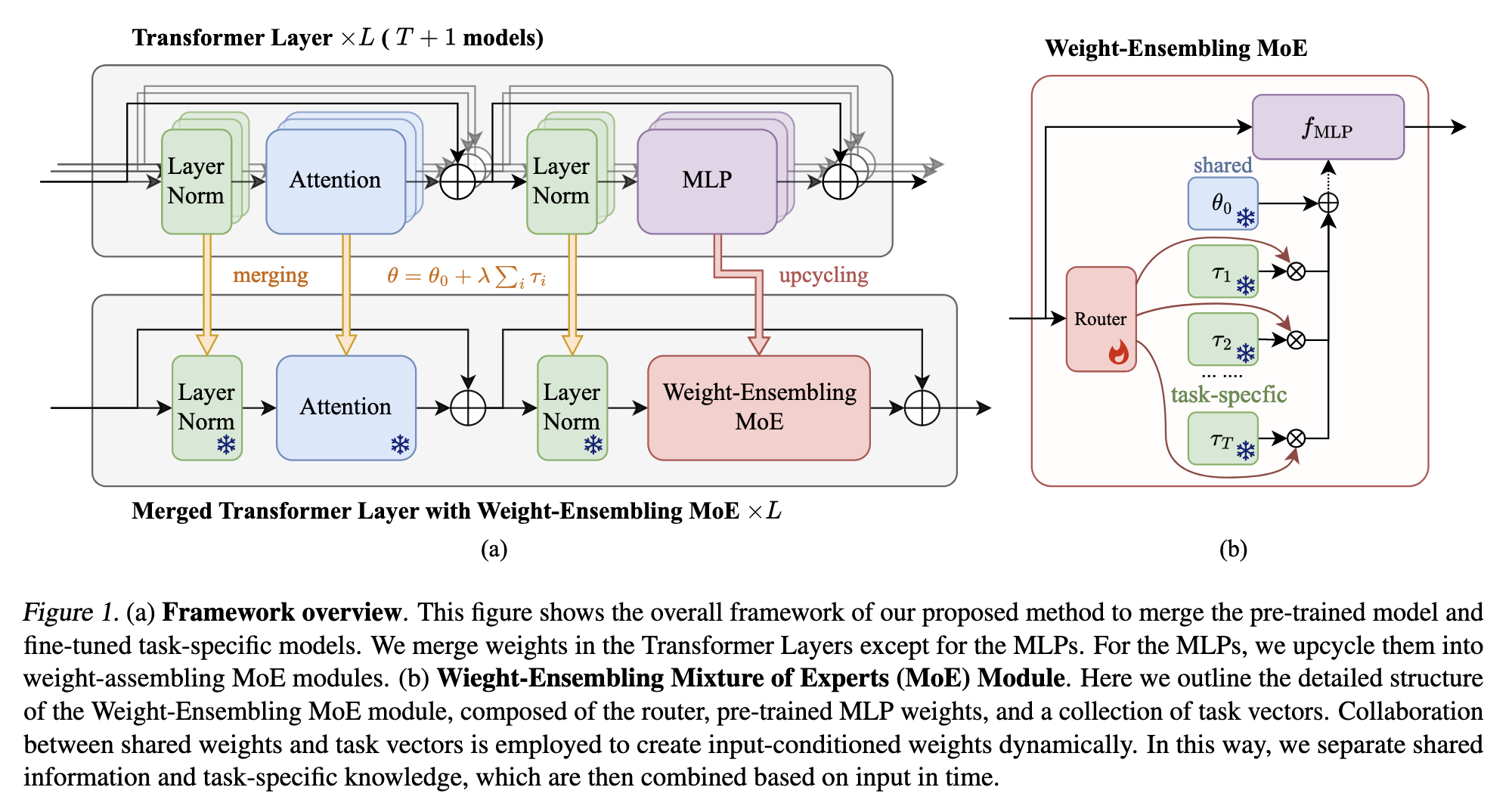

Merging Multi-Task Models via Weight-Ensembling Mixture of ExpertsIn The 41th International Conference on Machine Learning (ICML), 2024

Merging Multi-Task Models via Weight-Ensembling Mixture of ExpertsIn The 41th International Conference on Machine Learning (ICML), 2024 - ICLRParameter efficient multi-task model fusion with partial linearizationIn the 12th International Conference on Learning Representations, 2024

- IJCAIImproving Heterogeneous Model Reuse by Density EstimationIn Thirty-Second International Joint Conference on Artificial Intelligence, 2023